Send With Confidence

Partner with the email service trusted by developers and marketers for time-savings, scalability, and delivery expertise.


Note: This is a post from #frontend@twiliosendgrid. For other engineering posts, head over to the technical blog roll.

Across all of our frontend apps, we had, and still have, the following goal: provide a way to write consistent, debuggable, maintainable, and valuable E2E (end to end) automation tests for our frontend applications and integrate with CICD (continuous integration and continuous deployment).

To reach the state we have today, in which hundreds of E2E tests are triggered or run on a schedule across all of SendGrid's frontend teams, we had to research and experiment with many potential solutions before we accomplished that main goal.

We first attempted to roll our own custom Ruby Selenium solution, called SiteTestUI (aka STUI) and developed by dedicated test engineers, in which all teams could contribute to one repo and run cross-browser automation tests. It sadly succumbed to slow, flaky tests, false alarms, lack of context between repos and languages, too many hands in one basket, painful debugging experiences, and more time spent on maintenance than providing value.

We then experimented with another promising library in WebdriverIO to write tests in JavaScript colocated with each team’s application repos. While this solved some problems, empowered more team ownership, and allowed us to integrate tests with Buildkite, our CICD provider, we still had flaky tests that were painful to debug and tough to write in addition to dealing with all too similar Selenium bugs and quirks.

We wanted to avoid another STUI 2.0 and started to explore other options. If you would like to read more about our lessons learned and strategies discovered along the way when migrating from STUI to WebdriverIO, check out part 1 of the blog post series.

In this final part two of the blog post series, we will cover our journey from STUI and WebdriverIO to Cypress and how we went through similar migrations in setting up the overall infrastructure, writing organized E2E tests, integrating with our Buildkite pipelines, and scaling to other frontend teams in the organization.

TLDR: We adopted Cypress over STUI and WebdriverIO and we accomplished all of our goals of writing valuable E2E tests to integrate with CICD. Plenty of our work and lessons learned from WebdriverIO and STUI carried over nicely into how we use and integrate Cypress tests today.

When we searched for alternatives to WebdriverIO, we saw other Selenium wrappers like Protractor and Nightwatch with a similar feature set to WebdriverIO, but we felt we would most likely run into long setups, flaky tests, and tedious debugging and maintenance down the road.

We thankfully stumbled onto a new E2E testing framework called Cypress, which showcased quick setups, fast and debuggable tests run in the browser, network layer request stubbing, and most importantly, no Selenium under the hood.

We marveled at awesome features like video recordings, the Cypress GUI, paid Dashboard Service, and parallelization to try out. We were willing to compromise on cross-browser support in favor of valuable tests passing consistently against Chrome with a repertoire of tools at our disposal to implement, debug, and maintain the tests for our developers and QAs.

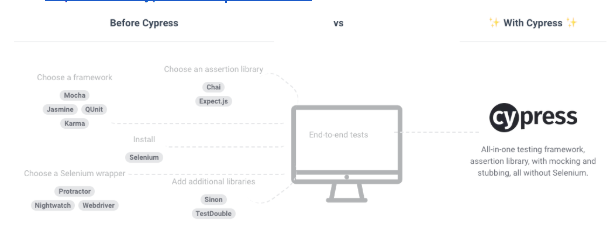

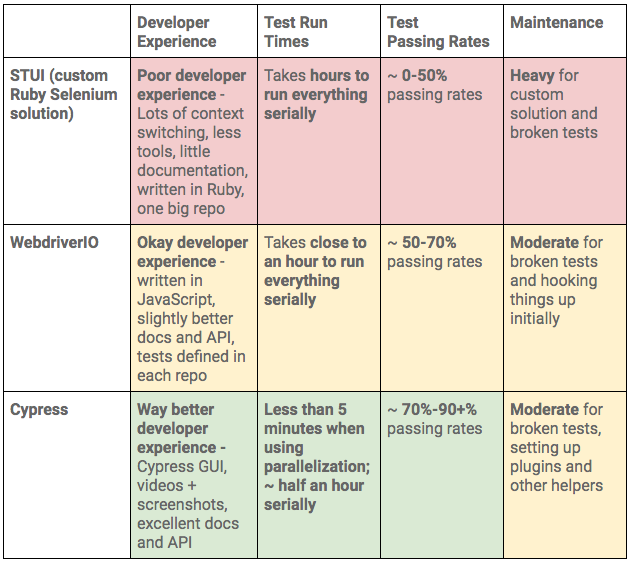

We also appreciated the testing frameworks, assertion libraries, and all the other tools chosen for us to have a more standardized approach to tests across all of our frontend teams. Below, we provided a screenshot of the differences between a solution like WebdriverIO and Cypress and if you would like to see more of the differences between Cypress and Selenium solutions, you can check out their documentation on how it works.

With that in mind, we committed to testing out Cypress as another solution for writing fast, consistent, and debuggable E2E tests to be eventually integrated with Buildkite during CICD. We also deliberately set another goal of comparing Cypress against the previous Selenium-based solutions to ultimately determine by data points if we should keep looking or build our test suites with Cypress going forward. We planned to convert existing WebdriverIO tests and any other high priority tests still in STUI over to Cypress tests and compare our developer experiences, velocity, stability, test run times, and maintenance of the tests.

When switching over from STUI and WebdriverIO to Cypress, we tackled it systematically through the same high-level strategy we used when we attempted our migration from STUI to WebdriverIO in our frontend application repos. For more detail on how we accomplished such steps for WebdriverIO, please refer to part 1 of the blog post series. The general steps for transitioning to Cypress involved the following:

In order to accomplish our secondary goals, we also added extra steps to compare Cypress with the prior Selenium solutions and to eventually scale Cypress across all the frontend teams in the organization:

6. Comparing Cypress in terms of developer experiences, velocity, and stability of the tests vs. WebdriverIO and STUI
7. Scaling to other frontend teams

To get up and running quickly with Cypress, all we had to do was `npm install cypress` into our projects and start up Cypress for the first time. It then automatically laid out a `cypress.json` configuration file and a `cypress` folder with starter fixtures, tests, and other setup files for commands and plugins. We appreciated how Cypress came bundled with Mocha as the test runner, Chai for assertions, and Chai-jQuery and Sinon-Chai for even more assertions to use and chain off of. We no longer had to spend considerable time researching which test runner, reporter, assertions, and service libraries to install and use, as we did when we first started with WebdriverIO or STUI. We immediately ran some of the generated tests with the Cypress GUI and explored the many debugging features at our disposal such as time travel debugging, selector playground, recorded videos, screenshots, command logs, browser developer tools, etc.

We also set it up later with ESLint and TypeScript for extra static type checking and formatting rules to follow when committing new Cypress test code. We initially had some hiccups with TypeScript support, and some files, such as those centered around the plugins, needed to remain JavaScript files, but for the most part we were able to type check the majority of our files for our tests, page objects, and commands.

Here is an example folder structure that one of our frontend teams followed to incorporate page objects, plugins, and commands:

--config or --env to accomplish our use cases.

http://127.0.0.1:8000 or against the deployed staging app URL, we adjusted the `baseUrl` config flag in the command and added extra environment variables such as `testEnv` to help load up certain fixtures or environment specific test data in our Cypress tests. For example, API keys used, users created, and other resources may be different across environments. We utilized `testEnv` to toggle those fixtures or add special conditional logic if some features were not supported in an environment or test setup differed. We would access the environment through a call like `Cypress.env("testEnv")` in our specs.
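As a rough illustration of toggling test data by environment, the sketch below maps a `testEnv` value to a fixture path. The `fixturePathFor` helper, the folder layout, and the list of environment names are assumptions made for this example, not the code the team actually used.

```javascript
// Hypothetical helper: choose an environment-specific fixture file.
// The "<env>/<name>.json" layout and the supported environment names
// are assumptions for illustration only.
const SUPPORTED_ENVS = ["testing", "staging"];

function fixturePathFor(testEnv, name) {
  if (!SUPPORTED_ENVS.includes(testEnv)) {
    throw new Error(`Unsupported testEnv: ${testEnv}`);
  }
  return `${testEnv}/${name}.json`;
}

// Inside a Cypress spec this might be used as:
//   const testEnv = Cypress.env("testEnv");
//   cy.fixture(fixturePathFor(testEnv, "users")).then((users) => { /* ... */ });
```

Failing loudly on an unknown environment is one possible design choice; it surfaces a mistyped `--env testEnv=...` value before any test data is loaded.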

`cypress:open:*` to represent opening up the Cypress GUI for us to select our tests to run through the UI when we locally developed and `cypress:run:*` to denote executing tests in headless mode, which was more tailored for running in a Docker container during CICD. What came after `open` or `run` would be the environment so our commands would read easily like `npm run cypress:open:localhost:staging` to open up the GUI and run tests against a local Webpack dev server pointing to staging APIs or `npm run cypress:run:staging` to run tests in headless mode against the deployed staging app and API. The `package.json` Cypress scripts came out like this:

cypress/plugins/index.js file to have a base cypress.json file and separate configuration files that would be read based on an environment variable called configFile to load up a specific configuration file. The loaded configuration files would then be merged with the base file to eventually point against a staging or a mock backend server.

cypress.json file that included shared properties for general timeouts, project ID to hook up with the Cypress Dashboard Service which we will talk about later, and other settings as shown underneath:

config folder in the cypress folder to hold each of our configuration files such as localhostMock.json to run our local Webpack dev server against a local mock API server or staging.json to run against the deployed staging app and API. These configuration files to be diffed and merged with the base config looked like this:

cypress/plugins/index.js file, we added logic to read an environment variable called configFile set from a Cypress command that would eventually read the corresponding file in the config folder and merge it with the base cypress.json such as below:
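A minimal sketch of that merge logic follows, assuming a Cypress version that still uses `cypress.json` and a plugins file. The names `mergeConfig` and `createPlugins`, the injected `loadEnvConfig` reader, and the `localhostMock` default are assumptions for illustration; the reader is injected only so the merge can be exercised without touching the file system.

```javascript
// Sketch of the logic behind cypress/plugins/index.js.
// Top-level keys from the environment file override the base config, while
// the nested `env` block is merged so base env values are kept.
function mergeConfig(baseConfig, envConfig) {
  return {
    ...baseConfig,
    ...envConfig,
    env: { ...(baseConfig.env || {}), ...(envConfig.env || {}) },
  };
}

// `loadEnvConfig(name)` is a hypothetical reader that returns the parsed
// contents of cypress/config/<name>.json.
function createPlugins(loadEnvConfig, defaultConfigFile = "localhostMock") {
  // Cypress calls the exported function with (on, config) and uses the
  // returned object as the resolved configuration.
  return (on, config) => {
    const configFile = (config.env && config.env.configFile) || defaultConfigFile;
    return mergeConfig(config, loadEnvConfig(configFile));
  };
}

// In cypress/plugins/index.js this might be wired up as:
//   const path = require("path");
//   module.exports = createPlugins((name) =>
//     require(path.resolve(__dirname, "..", "config", `${name}.json`)));
```

With this shape, a command run with `--env configFile=staging` would resolve to the base settings plus whatever `staging.json` overrides.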

Makefile that resembled the following:

make cypress_open_staging or make cypress_run_staging in our `package.json` npm scripts.

configFile for which environment configuration file to load up, BASE_URL to visit our pages, API_HOST for different backend environments, or SPECS to determine which tests to run before we kick off any of the Makefile commands.

package.json scripts section, though this was not necessary if someone wanted to only use the Makefile, and it would look like the following:

package.json more streamlined as a result. Most importantly, we could see at a glance right away all the Cypress commands related to the mock server and local Webpack dev server versus the staging environments and which ones are “opening” up the GUI rather than “running” in headless mode.

- `$` or `browser` with `cy` or `Cypress`, i.e. visiting a page through `$(".button").click()` to `cy.get(".button").click()`, `browser.url()` to `cy.visit()`, or `$(".input").setValue()` to `cy.get(".input").type()`
- `$` or `$$` usually turned into a `cy.get(...)` or `cy.contains(...)`, i.e. `$$(".multiple-elements-selector")` or `$(".single-element-selector")` turned into `cy.get(".any-element-selector")`, `cy.contains("text")`, or `cy.contains(".any-selector")`
- `$(".selector").waitForVisible(timeoutInMs)`, `$(".selector").waitUntil(...)`, or `$(".selector").waitForExist()` calls in favor of letting Cypress by default handle the retries and retrieving of the elements over and over with `cy.get(".selector")` and `cy.contains(textInElement)`. If we needed a longer timeout than the default, we would use `cy.get(".selector", { timeout: longTimeoutInMs })` altogether, and then after retrieving the element we would chain the next action command to do something with the element, i.e. `cy.get(".selector").click()`.
- `addCommand('customCommand', () => {})` turned into `Cypress.Commands.add('customCommand', () => {})` and doing `cy.customCommand()`
- `node-fetch` and wrapping it in `browser.call(() => fetch(...))` and/or `browser.waitUntil(...)` led to making HTTP requests in a Cypress Node server through `cy.request(endpoint)` or a custom plugin we defined and made calls like `cy.task(taskToHitAPIOrService)`.
- `browser.pause(timeoutInMs)`, but with Cypress we improved that with the network stubbing functionality and were able to listen and wait for the specific request to finish with `cy.server()`, `cy.route("method", "/endpoint/we/are/waiting/for").as("endpoint")`, and `cy.wait("@endpoint")` before kicking off the action that would trigger the request.

`open()` functionality to be shared across all pages.
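To make the command translations described above more concrete, here is a small sketch of a helper written in the Cypress style. The selectors, the `/v3/user/profile` route, and the `saveProfile` name are hypothetical, and `cy.server()` and `cy.route()` are the network stubbing APIs from the Cypress versions discussed in this post.

```javascript
// Sketch only: selectors, route, and alias names are hypothetical.
// `cy` is the global Cypress chainer available inside spec files.
function saveProfile(name) {
  // Register the route alias before the action that triggers the request.
  cy.server();
  cy.route("PATCH", "/v3/user/profile").as("saveProfile");

  // cy.get() keeps retrying until the element exists or the timeout
  // elapses, so no waitForVisible()/waitForExist() call is needed.
  cy.get(".profile-name-input", { timeout: 10000 }).clear().type(name);
  cy.contains("button", "Save").click();

  // Wait for the aliased request instead of pausing for a fixed time.
  cy.wait("@saveProfile");
}
```

The only explicit wait left is the one tied to the aliased request, which is the part that replaces a fixed `browser.pause(timeoutInMs)`.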

open() functionality with its page route, and provide any helper functionality as shown below.
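As a hedged sketch of the page object pattern described above, the example below shows a base class with a shared `open()` and one page that supplies its own route and a helper. The class names, the `/settings` route, and the selectors are hypothetical.

```javascript
// Sketch of the page object pattern; names, routes, and selectors are
// hypothetical. `cy` is the Cypress global available in spec files.
class Page {
  // Shared by every page object: visit a route relative to baseUrl.
  open(route) {
    return cy.visit(route);
  }
}

class SettingsPage extends Page {
  // Each page supplies its own route to the shared open().
  open() {
    return super.open("/settings");
  }

  get saveButton() {
    return cy.contains("button", "Save");
  }

  // Helper that wraps a small user interaction.
  updateDisplayName(name) {
    cy.get("[data-test=display-name]").clear().type(name);
    return this.saveButton.click();
  }
}
```

A spec could then call `new SettingsPage().open()` followed by `updateDisplayName("...")`, keeping selectors out of the test body.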

`cy.login` command, set a cookie to keep the user logged in, make the API calls necessary to return the user to the desired starting state through either `cy.request("endpoint")` or `cy.task("pluginAction")` calls, and visit the authenticated page we sought to test directly. Then, we would automate the steps to accomplish a user feature flow as shown in the test layout below.

`cy.login()`, and logging out, `cy.logout()`? We implemented them in Cypress in this way so all of our tests would log in as a user through the API in the same way.
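One way such commands could be written is sketched below. The `/api/login` and `/api/logout` endpoints, the `auth_token` cookie name, and the response shape are assumptions for illustration, not the actual API.

```javascript
// Sketch only: endpoints, cookie name, and response shape are assumptions.
function login(username, password) {
  // Log in through the API instead of the UI, then store the token in a
  // cookie so later cy.visit() calls land on authenticated pages.
  return cy
    .request("POST", "/api/login", { username, password })
    .then((response) => cy.setCookie("auth_token", response.body.token));
}

function logout() {
  return cy.request("POST", "/api/logout").then(() => cy.clearCookies());
}

// Register the commands only when running inside Cypress, where the
// `Cypress` global exists; specs can then call cy.login() and cy.logout().
if (typeof Cypress !== "undefined") {
  Cypress.Commands.add("login", login);
  Cypress.Commands.add("logout", logout);
}
```

Logging in through the API rather than the login form keeps each test's setup short and avoids re-testing the login UI in every spec.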

`cy.task("pluginAction")` commands to use some libraries within the Cypress Node server to connect to a test email IMAP client/inbox such as SquirrelMail to check for matching emails in an inbox after prompting an action in the UI and to follow redirect links from those emails back into our web app domain to verify certain download pages showed up, effectively completing a whole customer flow. We implemented plugins that would wait for emails to arrive in the SquirrelMail inbox given certain subject lines, delete emails, send emails, trigger email events, poll backend services, and perform many more useful setups and teardowns through the API for our tests to use.
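The "wait for an email to arrive" plugin described above boils down to polling with a deadline. Below is a minimal sketch of that idea; `waitForEmail` and the injected `fetchEmails` function (standing in for whatever IMAP client is used) are hypothetical names, and the email shape `{ subject, body }` is an assumption.

```javascript
// Sketch of a polling helper that a cy.task() handler could call.
// `fetchEmails` is a hypothetical async function returning an array of
// { subject, body } objects from the test inbox.
async function waitForEmail(fetchEmails, subject, { timeoutMs = 30000, intervalMs = 1000 } = {}) {
  const deadline = Date.now() + timeoutMs;
  for (;;) {
    const emails = await fetchEmails();
    const match = emails.find((email) => email.subject.includes(subject));
    if (match) return match;
    if (Date.now() >= deadline) {
      throw new Error(`No email with subject "${subject}" within ${timeoutMs}ms`);
    }
    // Pause before checking the inbox again.
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
}

// Registered in cypress/plugins/index.js, this could look like:
//   on("task", {
//     waitForEmail: ({ subject }) => waitForEmail(fetchInbox, subject),
//   });
// and be called from a spec with cy.task("waitForEmail", { subject: "..." }).
```

Because the task runs in the Cypress Node process, the spec only sees a single `cy.task(...)` call that resolves with the matching email or fails once the deadline passes.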

npm run cypress:run:staging because those machines do not have Node, browsers, our application code, or any other dependencies to actually run the Cypress tests. When we set up WebdriverIO before, we needed to assemble three separate services in a Docker Compose file to have the proper Selenium, Chrome, and application code services operate together for tests to run.

cypress/base, to set up the environment in a Dockerfile and only one service in a docker-compose.yml file with our application code to run the Cypress tests. We will go over one way of doing it as there are other Cypress Docker images to use and other ways to set up the Cypress tests in Docker. We encourage you to look at the Cypress documentation for alternative

Dockerfile called Dockerfile.cypress and installed all the node_modules and copied the code over to the image’s working directory in a Node environment. This would be used by our cypress Docker Compose service and we achieved the Dockerfile setup in the following way:

`Dockerfile.cypress`, we can integrate Cypress to run selected specs against a certain environment API and deployed app through one Docker Compose service called `cypress`. All we had to do was interpolate some environment variables such as `SPECS` and `BASE_URL` to run selected Cypress tests against a certain base URL through the `npm run cypress:run:cicd:staging` command, which looks like this: `"cypress:run:cicd:staging": "cypress run --record --key --config baseUrl=$BASE_URL --env testEnv=staging"`.

docker-compose.cypress.yml file looked similar to this:

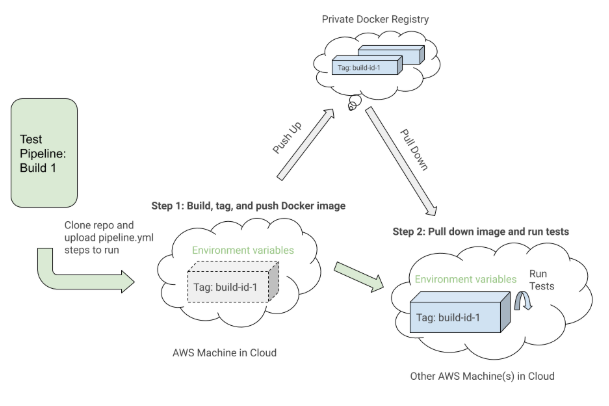

VERSION environment variable which allows us to reference a specific tagged Docker image. We will demonstrate later how we tag a Docker image and then pull down the same Docker image for that build to run against the correct code for the Cypress tests.

BUILDKITE_BUILD_ID passed through, which comes for free along with other Buildkite environment variables for every build we kick off, and the ci-build-id flag. This enables Cypress’s parallelization feature and when we set a certain number of machines allocated for the Cypress tests, it will auto-magically know how to spin up those machines and separate our tests to run across all those machine nodes to optimize and speed up our test run times.

`cypress/videos` and `cypress/screenshots` folders for one to review locally, and we simply mount those folders and upload them to Buildkite as a fail-safe.

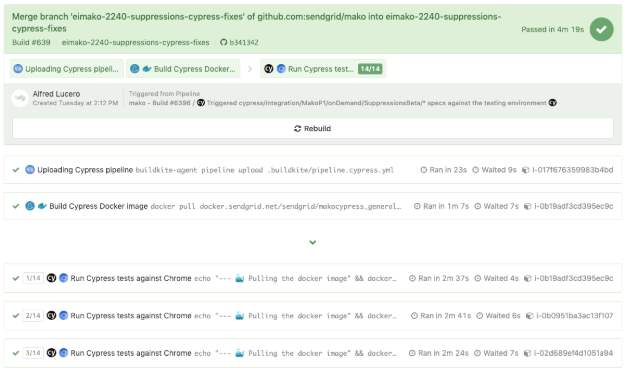

.yml file on our AWS machines with Bash scripts and environment variables set either in the code or through the repo’s Buildkite pipeline settings in the web UI. Buildkite also allowed us to trigger this testing pipeline from our main deploy pipeline with exported environment variables and we would reuse these test steps for other isolated test pipelines that would run on a schedule for our QAs to monitor and look at.

pipeline.cypress.yml file that demonstrates setting up the Docker images in the “Build Cypress Docker Image” step and running the tests in the “Run Cypress tests” step:

`build` command to build the `cypress` service with all of the application test code and tagged it with `latest` and the `${VERSION}` environment variable so we can eventually pull down that same image with the proper tag for this build in a future step. Each step may execute on a different machine in the AWS cloud, so the tags uniquely identify the image for the specific Buildkite run. After tagging the image, we pushed the `latest` and version-tagged images up to our private Docker registry to be reused.

SPECS and BASE_URL, we would run specific test files against a certain deployed app environment for this specific Buildkite build. These environment variables would be set through the Buildkite pipeline settings or it would be triggered dynamically from a Bash script that would parse a Buildkite select field to determine which test suites to run and against which environment.

pipeline.cypress.yml file to make it happen. An example of triggering the tests after deploying some new code to a feature branch environment from the deploy pipeline looks like this:

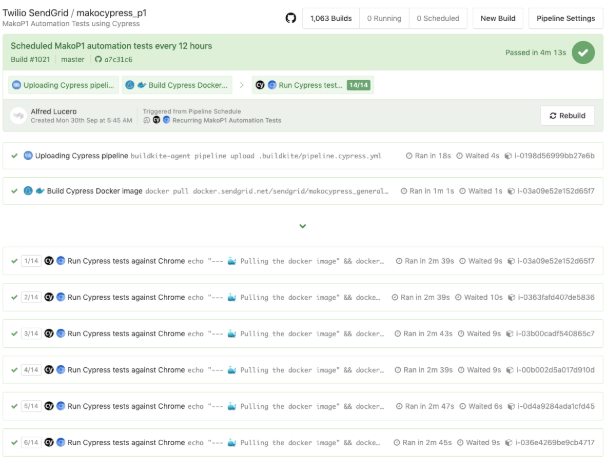

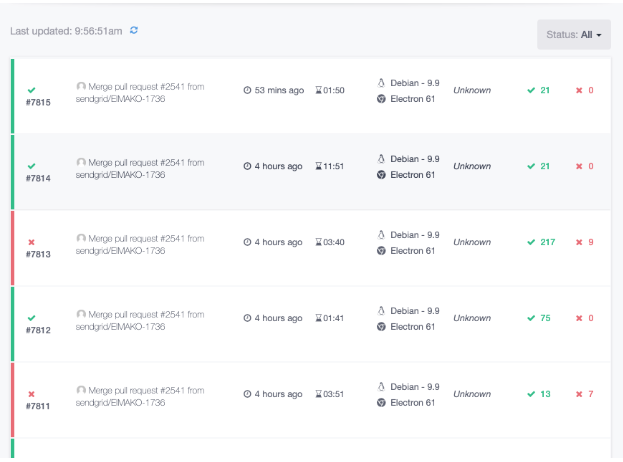

pipeline.cypress.yml file for our dedicated Cypress Buildkite pipelines running on a schedule, we have builds such as the one running our “P1”, highest priority E2E tests, as shown in the photo underneath:

- `$(".selector").waitForVisible()` calls and now rely on `cy.get(...)` and `cy.contains(...)` commands with their default timeout. It will automatically keep on retrying to retrieve the DOM elements and if the test demanded a longer timeout, it is also configurable per command. With less worrying about the waiting logic, our tests became way more readable and easier to chain.
- `cy.server()` and `cy.route()`.
- `npm install cypress`.
